7 Deadliest Plagues in History

COVID-19 has claimed more than 5 million lives, but as it turns out, estimates are

At the moment, several COVID-19 variants are still circulating in the US.

Have you ever considered that there were even more dangerous viruses before COVID-19? The COVID-19

Are COVID vaccinations really THAT safe? The COVID-19 pandemic has had devastating effects across the

Since COVID-19 entered our lives, we have found ourselves looking for ways to protect ourselves

Infectious diseases are among the most frightening weapons that have ever existed. Just the

At the beginning of the global coronavirus pandemic, our nation’s top infectious disease expert, Dr.

As the latest wave of the coronavirus pandemic sweeps across our nation, helped by the

A donation of seed grain from the United States to Iraq was wrongly consumed by

Thalidomide was launched by the German company Chemie Grünenthal and marketed to help pregnant women. Before it was withdrawn three

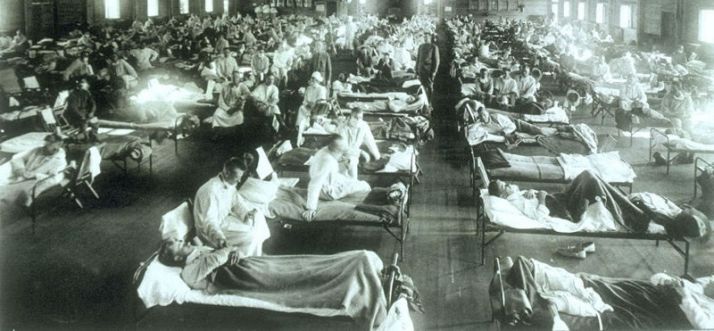

The disease spread from places where there were high concentrations of soldiers. It spread all

The Asian bubonic plague, known as the Black Death, hits London. No one knows why

Bubonic plague from Asia arrived at Constantinople via Africa in 542. That year, Roman Emperor

The American Society for Microbiology (ASM) discusses with the U.S. Congress issues related to the

A pandemic is an outbreak of an infectious disease that spreads across a large region,

In 2008, Zimbabwe’s suffering people had a glimmer of hope to lighten the dark decades